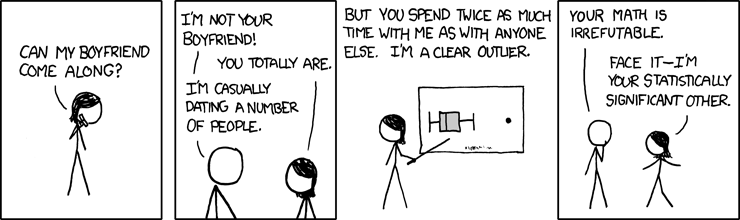

What do this cartoon and the latest edition of PLoS ONE have in common? Well, while reading Bora’s blog this week I saw an article entitled “Risks for Central Nervous System Diseases among Mobile Phone Subscribers: A Danish Retrospective Cohort Study,” and my ears perked up. We have been mocking the idea that cell phones cause everything from brain cancer to colony collapse disorder, and it’s always fun to see what cell phones are being blamed for on the strength of weak associations and correlations.

In this article the authors identified more than four hundred thousand cell phone subscribers and linked their subscription records to the Danish Hospital Discharge Registry, which has recorded hospitalizations since 1977. They then looked for associations between cell phone use and various CNS disorders over the last few decades: epilepsy, ALS, vertigo, migraine, MS, Parkinson’s disease and dementia, a broad spectrum of diseases with a variety of pathologies and causes. Basically, they’re fishing. Well, what did they find?

Here’s the summary of the associations they identified:

First, the associations they consider significant: a statistically significant increase in migraine and vertigo among cell phone subscribers. Note the SHR (standardized hospitalization ratio) column, which gives the effect on risk. An SHR less than one would demonstrate an ostensibly “protective” effect, a decrease in that disease in the subscriber population, while an SHR greater than one would demonstrate a “harmful” effect, an increased association between the disease and cell phone use.

Second, a host of other associations they dismiss as a healthy cohort effect:

In short, cell phones are correlated with increased vertigo and migraine, but with decreased epilepsy, vascular dementia and Alzheimer disease, and with no effect on MS or ALS.
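For readers unfamiliar with the ratio: a standardized hospitalization ratio is, in general terms, the observed number of hospitalizations divided by the number expected from population rates. The Python below is only a sketch of that idea. It attaches an approximate 95% confidence interval using Byar’s approximation to the Poisson limits, which is an assumption on my part and not necessarily the method Schüz et al. used. The function names `shr_with_ci` and `interpret` are hypothetical, and the counts are made up for illustration; they are not from the study.

```python
import math

def shr_with_ci(observed: int, expected: float, z: float = 1.96):
    """Return (ratio, lower, upper): observed/expected with an approximate CI.

    The interval uses Byar's approximation to the exact Poisson limits on the
    observed count; z = 1.96 gives a roughly 95% interval.
    """
    if observed < 0 or expected <= 0:
        raise ValueError("need observed >= 0 and expected > 0")
    ratio = observed / expected
    if observed == 0:
        lower_count = 0.0  # the lower Poisson limit for zero events is zero
    else:
        lower_count = observed * (1 - 1 / (9 * observed)
                                  - z / (3 * math.sqrt(observed))) ** 3
    o1 = observed + 1
    upper_count = o1 * (1 - 1 / (9 * o1) + z / (3 * math.sqrt(o1))) ** 3
    return ratio, lower_count / expected, upper_count / expected

def interpret(observed: int, expected: float) -> str:
    """Classify a ratio by whether its confidence interval excludes 1."""
    _, lower, upper = shr_with_ci(observed, expected)
    if lower > 1:
        return "increased"   # the "harmful" direction
    if upper < 1:
        return "decreased"   # the ostensibly "protective" direction
    return "no significant difference"

# Hypothetical counts, not taken from the study:
print(interpret(120, 100))    # ratio 1.2 on few events: no significant difference
print(interpret(1200, 1000))  # same ratio 1.2 on ten times the events: increased
print(interpret(50, 100))     # ratio 0.5: decreased
```

The same ratio can be “significant” or not depending on how many events sit behind it, which is why the interval tells you more than the point estimate alone.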

As with many such fishing trips for statistically significant correlations, they found something, which isn’t that surprising if you’ve read PLoS and/or our coverage of John Ioannidis’ research. The critical fact to take away from his writings is that if you study anything, anything at all, with statistics, you’re going to find statistically significant correlations. In fact, finding nothing would be strange: if you study enough variables at the usual p < 0.05 threshold, you would predict that roughly 5% of them come out statistically significant by chance alone. The nature of the scientific literature makes this worse. Because of the “file-drawer effect,” researchers are more likely to publish big effects and “file away” the papers showing no effect, even though those null results are true, so the share of chance findings in print goes up.
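To make that arithmetic concrete, here is a small Python sketch; it is my own illustration, not code from the paper or from Ioannidis. It assumes independent tests and valid p-values, which are uniform on [0, 1] when there is truly no effect. The file-drawer function is a simplified form of the positive-predictive-value argument Ioannidis makes, with no bias term. The names and the `prior_true` and `power` values are hypothetical choices for illustration only.

```python
import random

ALPHA = 0.05  # conventional significance threshold

def chance_hit_rate(n_tests: int, seed: int = 0) -> float:
    """Simulate n_tests comparisons where there is truly no effect.

    For a true null hypothesis a valid p-value is uniform on [0, 1], so each
    test comes out 'significant' with probability ALPHA.
    """
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n_tests) if rng.random() < ALPHA)
    return hits / n_tests

def prob_at_least_one(k: int, alpha: float = ALPHA) -> float:
    """Chance that k independent null outcomes give at least one p < alpha."""
    return 1 - (1 - alpha) ** k

def published_false_share(prior_true: float, power: float,
                          alpha: float = ALPHA) -> float:
    """Share of significant (hence published) results that are false positives
    when null results stay in the file drawer.

    prior_true: assumed fraction of tested hypotheses that are really true.
    power: assumed chance that a real effect is detected.
    """
    true_pos = prior_true * power
    false_pos = (1 - prior_true) * alpha
    return false_pos / (true_pos + false_pos)

print(chance_hit_rate(100_000))         # close to 0.05
print(prob_at_least_one(10))            # about 0.40
print(published_false_share(0.1, 0.8))  # 0.045 / 0.125, i.e. about 0.36
```

With ten unrelated diseases and no real effect anywhere, the odds of at least one “hit” are already about 40%, and if only the hits get published, a large share of what reaches print can be noise.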

So the question that has to be asked isn’t whether such correlations can be found, but whether we should care. After all, what is the physiologic mechanism by which cell phones increase migraine or decrease epilepsy? What biological pathway is cell phone radiation supposed to be interacting with? Why is there no dose-response? Longer exposure shows less of an effect, which makes even less sense given that the power output of phones dropped greatly when they went from analog to digital. So the early adopters, who got even larger exposures, don’t show worsening migraine or vertigo.

How does any of this make any scientific sense whatsoever? What does an article like this contribute other than more misinformation dumped carelessly on the public from the over-interpretation of statistical noise? Why PLoS? Why are you making our lives harder?

I’m sad to say that this article in PLoS will be responsible for more such alarmism and nonsense, and frankly it would not have been published if the editors had been paying attention to, well, PLoS. I might have to go find Isis’ picture of that teddy bear for this one.

Joachim Schüz, Gunhild Waldemar, Jørgen H. Olsen, Christoffer Johansen (2009). Risks for Central Nervous System Diseases among Mobile Phone Subscribers: A Danish Retrospective Cohort Study. PLoS ONE, 4 (2). DOI: 10.1371/journal.pone.0004389